UPDATE 1: Added AutoRemote and openHAB REST API Integration (one way, commanding OH Items), implemented Lucky’s suggestion of having a “do this” hotword for custom commands.

UPDATE 2: Added an MQTT subscription to receive messages to announce. Also, with the release of the official Google Home integration, I’ve removed the commands to turn the lights ON/OFF. They are no longer needed, and previously the AIY would often fail to recognize the “do this” preface to the command and respond with “those lights have not been set up yet” or some such. Annoying.

UPDATE 3: Added code to control a MAX7219.

This is the start of my adventures with a Google AIY Voice Kit and its integration with my home automation. It’s a work in progress but I wanted to capture my current thoughts before I forget them, as this will probably be a weeks-long project. I’ll update here as I make progress.

What is Google AIY Voice Kit

At its heart, it is a HAT for an RPi3 and some Python scripts to create a cardboard Google Home.

One advantage is that you can access the Google Cloud Speech API and create your own voice interface. Everything is simple Python scripts, so if you can do it in Python, you can create voice commands to control it.

The microphone that comes with it is amazing. It can hear and respond to me from anywhere I stand on that floor in my house. As long as there isn’t a closed door between me and the unit it will hear and respond. That alone makes it worth the $25 over a purely DIY build. The HAT also has onboard “OK Google” detection so it does not depend on the Internet to recognize “OK Google” (i.e. it only sends audio to Google after you wake it up instead of always listening and transmitting to Google).

Finally, almost everything you can do from Google Assistant, you can do from the AIY. This includes integration with things you might have on your phone like AutoVoice, which surprised me. There are lots of things it won’t do, like read the news, and it isn’t clear whether sending streams to a Chromecast and the like will work. The speaker is a bit weak but there is plenty of room to attach an amp and better speakers. When it is playing audio, it is also all but impossible to get it to recognize the “OK Google” hot word.

Goals

My main goal for this is to give a job to an old faux cathedral radio I picked up at a thrift shop. It is plenty large enough to make it easy to house everything, has an OK speaker, and looks kind of cool. I don’t really have a specific problem I’m trying to solve, I’m just exploring the possibilities.

So some goals I have to guide my experimentation include:

- basic Google Assistant features

- control home automation actuators by voice

- start playing Pandora or Google Music on the phone connected to the good speaker on the main floor

Assembly

The kit will come with a good build guide. Follow those instructions and the links in the guide. For now, the plan is to leave it in the cardboard box until I work out the functionality and then move it into the radio case.

Initial Software Configuration

Again, follow the instructions in the guide that came with the kit. Experiment with the included scripts. Read and play with the included examples as well. You will want to add billing to enable the Google Cloud Speech API. You get one free hour of recognition per month and additional time costs pennies, but this could be a deal killer for some people, and it is required to create your own voice commands (that don’t depend on your phone, more on that later).

In addition to the included instructions, I also set up VNC and SSH through the Raspberry Pi Configuration desktop tool and I copied my SSH keys over so I can log in without my password. This lets me admin and use it remotely without a monitor and keyboard plugged in.

I also did some customizations that I like such as installing fish and setting it as my default shell and checking out my fish functions which let me update/upgrade all my Linux machines with one command and convenience features like that.

Initial experiments

First I played with basic Google Assistant behaviors. My son and I asked all sorts of questions from different distances and at different volumes. We learned that one only needs to use a slightly elevated voice from anywhere on the same floor as the device, even out of line of sight, and it will hear and understand. I’m seriously impressed.

During these experiments, I thought I’d give a command to my Nest and to my surprise it worked! Out of the box, it already has the ability to control parts of my house. Next, I tried an AutoVoice command and that worked too! I already had AutoVoice Tasker integration with OH, including some shortcuts to control the actuators in the house. So somehow it goes from the AIY to my phone to execute the commands, and I already have basic integration with OH. Woohoo! However, I really don’t like the dependency on my phone, though I did confirm that it works even when I’m not on my home wifi.

The final goal is to be able to control the good speaker via voice. This speaker is a Bose speaker with an old Android phone plugged into it. So to start I’m leveraging the AutoVoice integration, AutoRemote, and AutoInput. The AIY will trigger an AutoVoice profile on my phone, which will send a message to my living room phone. That message triggers a Tasker profile on that phone to start up Pandora or Google Music (depending on the message) and uses AutoInput to navigate through the various popups and screens to get the music actually playing. This is a bigger deal with Pandora than with Google Music which, as the default music app, responds to the media button emulation from Tasker. This is still a work in progress.

So far, everything described above is done without any modifications to the built-in AIY examples.

Let’s make some changes

Power Control

It would be nice to be able to reboot and shut down the AIY nicely. It is an RPi after all and pulling the plug is not nice. I also want to be able to do standard Google Assistant stuff and create my own voice commands at the same time. So looking around at some examples I’ve come up with the following, which is based on assistant_library_demo.py, cloudspeech_demo.py, and some examples I’ve found online:

    #!/usr/bin/env python3
    # Copyright 2017 Google Inc.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    """Run a recognizer using the Google Assistant Library.

    The Google Assistant Library has direct access to the audio API, so this Python
    code doesn't need to record audio. Hot word detection "OK, Google" is supported.

    The Google Assistant Library can be installed with:
        env/bin/pip install google-assistant-library==0.0.2

    It is available for Raspberry Pi 2/3 only; Pi Zero is not supported.
    """

    import logging
    import sys
    import subprocess

    import aiy.assistant.auth_helpers
    import aiy.audio
    import aiy.voicehat
    from google.assistant.library import Assistant
    from google.assistant.library.event import EventType

    logging.basicConfig(
        level=logging.INFO,
        format="[%(asctime)s] %(levelname)s:%(name)s:%(message)s"
    )


    def power_off_pi():
        aiy.audio.say('Good bye!')
        subprocess.call('sudo shutdown now', shell=True)


    def reboot_pi():
        aiy.audio.say('See you in a bit!')
        subprocess.call('sudo reboot', shell=True)


    def process_event(assistant, event):
        status_ui = aiy.voicehat.get_status_ui()
        if event.type == EventType.ON_START_FINISHED:
            status_ui.status('ready')
            if sys.stdout.isatty():
                print('Say "OK, Google" then speak, or press Ctrl+C to quit...')

        elif event.type == EventType.ON_CONVERSATION_TURN_STARTED:
            status_ui.status('listening')

        elif event.type == EventType.ON_RECOGNIZING_SPEECH_FINISHED and event.args:
            # Custom "do this" commands are intercepted here
            print('You said:', event.args['text'])
            text = event.args['text'].lower()
            if text == 'do this power off':
                assistant.stop_conversation()
                power_off_pi()
            elif text == 'do this reboot':
                assistant.stop_conversation()
                reboot_pi()

        elif event.type == EventType.ON_END_OF_UTTERANCE:
            status_ui.status('thinking')

        elif event.type == EventType.ON_CONVERSATION_TURN_FINISHED:
            status_ui.status('ready')

        elif event.type == EventType.ON_ASSISTANT_ERROR and event.args and event.args['is_fatal']:
            sys.exit(1)


    def main():
        credentials = aiy.assistant.auth_helpers.get_assistant_credentials()
        with Assistant(credentials) as assistant:
            for event in assistant.start():
                process_event(assistant, event)


    if __name__ == '__main__':
        main()

The big changes from the assistant_library_demo.py include:

- pass the assistant along with the event to process_event

- import subprocess and aiy.audio

- added functions that use subprocess to issue shutdown and reboot commands

- added the elif event.type == EventType.ON_RECOGNIZING_SPEECH_FINISHED and event.args clause to receive custom commands

As can be seen, adding new commands is really quite easy and since this is Python, it should be easy to make calls to OH’s REST API or MQTT or to use AutoRemote for Linux to send messages to the living room android to control the music, bypassing the dependency on my phone.

Commanding openHAB Items

This was so easy to implement I’m almost embarrassed.

I just added the following import and function.

    import requests

    def openhab_command(item, state):
        # POST the command as plain text to the Item's REST endpoint
        url = 'http://ohserver:8080/rest/items/' + item
        headers = {'Content-Type': 'text/plain',
                   'Accept': 'application/json'}
        r = requests.post(url, headers=headers, data=state)

        # Speak the outcome based on the HTTP status code
        if r.status_code == 200:
            aiy.audio.say('Command sent')
        elif r.status_code == 400:
            aiy.audio.say('Bad command')
        elif r.status_code == 404:
            aiy.audio.say('Unknown item')
        else:
            aiy.audio.say('Command failed')

Then I added the following elifs after the power and reboot commands:

    elif text == 'do this lights on':
        assistant.stop_conversation()
        openhab_command("gLights_ALL", "ON")
    elif text == 'do this lights off':
        assistant.stop_conversation()
        openhab_command("gLights_ALL", "OFF")

Since the AIY already has native integration with the Nest and I see no need to ask it to open my garage doors this is all I have for now. But it should be enough to get everyone started.

NOTE: With the official Google Assistant integration, these are no longer needed. But if you want to control something that is not currently supported, I’ll leave these as examples.

Music

One of my goals is to control the old crappy phone I have connected to a Bose Soundlink III. This is a surprisingly good sounding speaker for its size with one major flaw: there is no way to disable the auto shutoff. After 30 minutes of no audio the speaker simply shuts off, making it unsuitable for the purpose I intend to put it to. The quality of this speaker is largely the reason why I do not care to play music from the AIY itself.

So I have an old Android phone connected to the speaker and I have a Tasker profile set to trigger every 20 minutes and play a three-second mp3 that consists of a 15 Hz tone. I chose 15 Hz because it is below the hearing range of humans, dogs, and cats, and I couldn’t find a tone generator that would let me generate a tone above the hearing range of cats (64 kHz). I play this through the notification channel at volume 1, which I hope will keep the speaker awake without interrupting playing audio. I’ve not tested this yet though. Stay tuned.
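For anyone who hits the same tone generator problem, a tone like this can be produced with nothing but the Python standard library. This is only a sketch under a few assumptions: it writes a WAV file rather than an mp3 (convert it with whatever tool you prefer), and the function name write_tone, the 8000 Hz sample rate, and the half-scale amplitude are made up for illustration. Whether a given speaker treats a 15 Hz signal as "audio" for its auto shutoff timer is still untested.

```python
import math
import struct
import wave


def write_tone(target, freq_hz=15.0, seconds=3.0, rate=8000, amplitude=0.5):
    """Write a mono 16-bit sine tone to a file path or a file-like object.

    Returns the number of audio frames written.
    """
    n_frames = int(rate * seconds)
    frames = bytearray()
    for i in range(n_frames):
        sample = amplitude * math.sin(2 * math.pi * freq_hz * i / rate)
        # Scale to the signed 16-bit range and pack as little-endian
        frames += struct.pack('<h', int(sample * 32767))
    with wave.open(target, 'wb') as wav:
        wav.setnchannels(1)      # mono
        wav.setsampwidth(2)      # 2 bytes per sample = 16 bit
        wav.setframerate(rate)
        wav.writeframes(bytes(frames))
    return n_frames


# Example (hypothetical file name): three seconds of 15 Hz
# write_tone('keepalive_15hz.wav')
```

A low sample rate is fine here because the tone is far below anything that needs high-frequency detail, and it keeps the file small.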

I’m a heavy Tasker and Auto apps user on my main phone so I’m looking to control the audio on this media playing phone using Tasker and Auto Apps. In order to send messages to the phone, I’m using AutoRemote. AutoRemote gives your devices a unique URL you can use with arguments. So all I have to do from the AIY script is to send an HTTP GET with the message and on the phone have a Tasker profile that triggers on that message. This will let me control anything that Tasker can control on the phone from the AIY.

I added the following functions:

    def autoremote(message):
        # Send the (already URL-escaped) message to the phone via AutoRemote
        r = requests.get(autoremote_url + message)

    def play_pandora():
        aiy.audio.say('Playing some Pandora')
        autoremote("play%20pandora")

    def play_google_music():
        aiy.audio.say('Playing some Google Music')
        autoremote("play%20google%20music")

    def stop_music():
        aiy.audio.say('Stopping the music')
        autoremote("stop%20music")

    def volume(direction):
        aiy.audio.say('Volume ' + direction)
        autoremote("volume%20" + direction)

autoremote_url is a variable containing the AutoRemote URL for the old phone, and the message uses URL-escaped characters for spaces and other special characters.
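Hand-writing %20 works for a handful of fixed messages, but the standard library can do the escaping. The sketch below shows the idea; build_autoremote_url and the placeholder URL are hypothetical names for illustration, and the real base URL is the personal AutoRemote link ending in &message=.

```python
from urllib.parse import quote

# Placeholder only; substitute the personal AutoRemote URL ending in "&message="
AUTOREMOTE_URL = "https://example.invalid/sendmessage?key=YOUR_KEY&message="


def build_autoremote_url(message, base_url=AUTOREMOTE_URL):
    """Return the full request URL with the message percent-encoded."""
    # safe='' also escapes "/" so the message cannot alter the URL structure
    return base_url + quote(message, safe='')


# Usage inside the script would then look something like:
#     requests.get(build_autoremote_url("play google music"))
```

With this in place the command functions can pass plain text such as "volume up" and let the helper handle spaces and special characters.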

And some elifs to process the custom commands:

    elif text == 'do this play pandora':
        assistant.stop_conversation()
        play_pandora()
    elif text == 'do this play google music':
        assistant.stop_conversation()
        play_google_music()
    elif text == 'do this stop music':
        assistant.stop_conversation()
        stop_music()
    elif text == 'do this volume up':
        assistant.stop_conversation()
        volume("up")
    elif text == 'do this volume down':
        assistant.stop_conversation()
        volume("down")

That is it from the AIY side of things. On the phone, I use Tasker profiles triggered by the messages I send using AutoRemote.

Starting and controlling Google Music is pretty easy. It responds to the native Tasker Media Button commands so I just need to start it and issue a Play command and it will start playing the last channel or playlist.

Pandora is a pain in the butt and I’ve not come up with a good approach yet for stopping it. Right now I use AutoInput to pull down the notifications and press the X on the notification. I found the X,Y coordinates by trial and error.

The rest of the controls are pretty easy as they are just volume adjustments and such.

MQTT Announcements

As with commanding Items through the REST API, adding an MQTT subscription was super easy as well. I added the following to the script:

    import paho.mqtt.client as mqtt

    ...

    # MQTT
    announceTopic = "aiy/fr/announce"
    username = "mqttUserName"
    password = "mqttPassword"
    lwtTopic = "aiy/fr/lwt"
    lwtMsg = "family room aiy is down"
    host = "mqttBrokerAddress"
    port = 1883

    def onMessage(client, userdata, msg):
        # msg.payload arrives as bytes; decode it so the TTS does not read out "b'...'"
        text = msg.payload.decode('utf-8')
        print(text)
        aiy.audio.say(text) # use the AIY TTS to speak the message

    def onConnect(client, userdata, flags, rc):
        # Subscribe on every (re)connect
        client.subscribe(announceTopic)

    def onDisconnect(client, userdata, rc):
        """Do nothing"""

I just put this at the top of the file just below the imports. There is probably room for improvement here. For example, I’m pretty sure that if the broker goes down this script will need to be restarted because I don’t have the code in place to reconnect properly (I could be wrong on that and the client handles that, I’ve not tested it yet).
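One detail worth handling is that paho hands the payload to the callback as bytes, so it needs decoding before it goes to the text-to-speech. The sketch below is one hedged way to do that; payload_to_text and make_on_message are hypothetical helper names, and speak stands in for any function that takes a string, such as aiy.audio.say. On the reconnect question, as far as I can tell from the paho documentation the background thread started by loop_start() retries the connection on its own, and because the subscribe call lives in the connect callback the subscription should come back with it, but that remains untested here.

```python
def payload_to_text(payload, encoding='utf-8'):
    """Convert an MQTT payload (normally bytes) into clean text for speech."""
    if isinstance(payload, (bytes, bytearray)):
        # errors='replace' avoids an exception on a malformed message
        text = bytes(payload).decode(encoding, errors='replace')
    else:
        text = str(payload)
    return text.strip()


def make_on_message(speak):
    """Build a paho-style on_message callback that speaks non-empty messages.

    `speak` is any callable that accepts a string, e.g. aiy.audio.say.
    """
    def on_message(client, userdata, msg):
        text = payload_to_text(msg.payload)
        if text:  # skip empty or whitespace-only announcements
            speak(text)
    return on_message


# Wiring it up in the script would look something like:
#     client.on_message = make_on_message(aiy.audio.say)
```

Passing the speak function in keeps the callback easy to try out on a desktop machine without the AIY libraries installed.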

On the OH side I just have

String aAnnounce "Radio Announce" { mqtt=">[mosquitto:aiy/fr/announce:command:*:default]" }

However, I have turned this feature off. To quote my wife, “I don’t need this thing announcing to the whole house when I already get all these alerts on my phone.”

When I manage to get a doorbell alert set up I will probably reenable this to play a chime through the AIY when the bell is rung.

MAX7219 Display

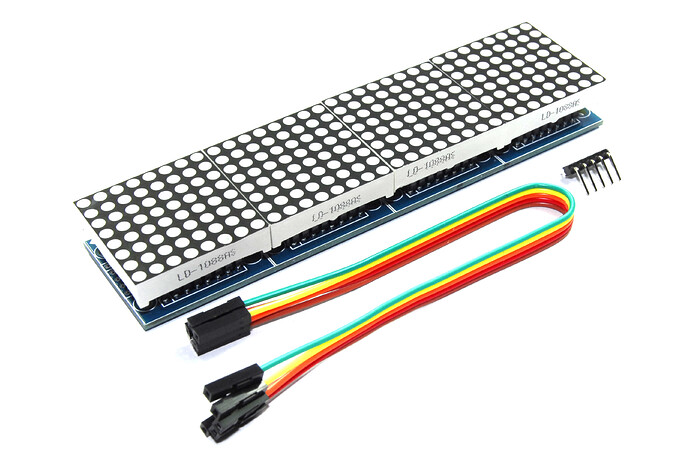

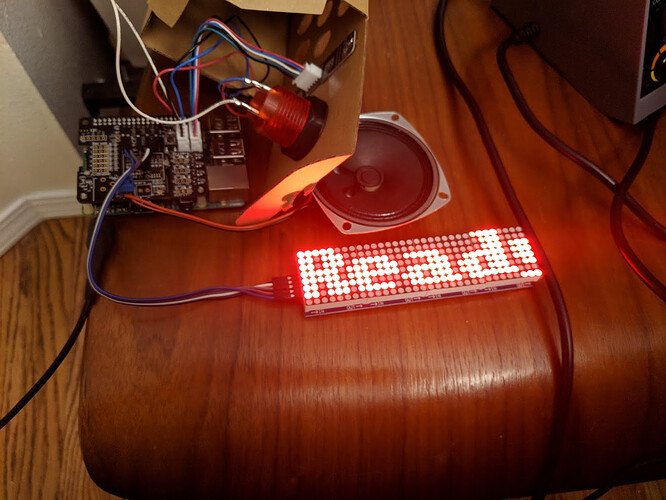

So the old radio case I plan on installing all of this into had a cassette tape deck in it, which left a hole in the side. I need something to plug that hole. By chance, while looking for an LCD touchscreen to fill the space I happened across a MAX7219 display on Wish. For $4 I thought I’d give it a shot. The device is a cascade of four 8x8 red LED matrices that is the perfect height and just a little longer than needed to plug the hole.

Thankfully all of the GPIO pins necessary are available on the AIY HAT. Cut three single pins off the strip of five that came with the set, leaving the other two joined, and solder them as follows:

- 1 to the 5V hole on the HAT

- 1 to the GND hole on the HAT

- 1 to the CE0 hole on the HAT

- the remaining two to the MOSI and CLK holes on the HAT.

Then attach the wires as follows:

| MAX7219 | AIY HAT |
|---|---|
| VCC | 5V |
| GND | GND |
| DIN | MOSI |
| CS | CE0 |
| CLK | CLK |

Then follow the instructions here to install the libraries necessary to run the display. I noticed that when the RPi first started up after wiring up the MAX7219 it barely had enough power to keep running as the display lit up all the LEDs at full brightness. However, once you run the demo the MAX7219 appears to draw little enough power to not require a separate power supply under normal usage. If you run into problems though, you can adjust the brightness level so it sucks up less power.

Once I got the demo to work (NOTE: a -90 orientation was the trick for the particular device I received) I added the following code to the script:

    from luma.led_matrix.device import max7219
    from luma.core.interface.serial import spi, noop
    from luma.core.legacy import show_message
    from luma.core.legacy.font import proportional, CP437_FONT

    # Four cascaded 8x8 blocks on SPI port 0, device 0 (CE0)
    serial = spi(port=0, device=0, gpio=noop())
    device = max7219(serial, cascaded=4, block_orientation=-90)

    msg = "Starting up..."
    print(msg)
    show_message(device, msg, fill="white", font=proportional(CP437_FONT))

I added this near the top of the script. Then I added show_message to each of the functions that implement “do this” commands and to other sections of process_event to get some visual feedback on what the script is doing. For example:

    elif event.type == EventType.ON_END_OF_UTTERANCE:
        status_ui.status('thinking')
        show_message(device, 'Thinking', fill="white", font=proportional(CP437_FONT))

Lessons Learned

- Google Assistant commands will take precedence over custom commands. So, for example, “Shutdown” can’t be used because that is a command Google Assistant recognizes and you will get a response that power controls are not yet supported on this device. It is not yet clear to me whether Assistant Shortcuts takes precedence over custom commands too, but I suspect the answer is yes.

- The Voice HAT cannot recognize the “OK Google” hot word while the speaker is playing. If you want to use the same speaker to play media you will need to include the button trigger and stop playing the media when the button is pressed. I’m hoping that when I put this into the case the microphones will be far enough from the speaker to be able to hear the hot word.

- The microphones are amazing for a $25 DIY kit

- The kit does a lot for you. It will take a good deal of research to see how it all really works versus running magic scripts to make things work.

- Ask the family before having the AIY start announcing messages.

- I find that the script often loses its connection to Google after a while. I’m not sure why, but a cron job to restart the script every 12 hours or so seems to mitigate the problem.

To Do

- generate and speak summary information about OH’s state

- finish Tasker/AutoRemote control of the audio controls

- refactor the Python code using better coding practices

- put the AIY into its case with some extra LEDs, a push button, and some sort of display
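The first item on that list could start from something like the sketch below, which reads Item states from the openHAB REST API and joins them into one sentence for the text-to-speech. Everything named here is an assumption for illustration: the ohserver base URL is the same placeholder used earlier, the helper names fetch_state and summarize are made up, and it uses urllib from the standard library instead of requests only so that it runs without extra packages.

```python
import urllib.request


def fetch_state(item, base_url='http://ohserver:8080'):
    """GET the plain-text state of one Item from the openHAB REST API."""
    url = base_url + '/rest/items/' + item + '/state'
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode('utf-8').strip()


def summarize(items, fetch=fetch_state):
    """Build one speakable sentence from {item_name: spoken_label} pairs."""
    parts = []
    for item, label in items.items():
        try:
            state = fetch(item)
        except OSError:
            # URLError, HTTPError, and socket timeouts are all OSError subclasses
            state = 'unavailable'
        parts.append('{} is {}'.format(label, state.lower()))
    return ', '.join(parts) + '.'


# Usage in the script might look like (the labels are hypothetical):
#     aiy.audio.say(summarize({'gLights_ALL': 'All lights'}))
```

Passing the fetch function as a parameter makes it possible to try the sentence building with canned states before pointing it at a real server.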

Conclusion

It is too soon to come up with a full conclusion but, at this point, I would say it is worth the cost to get the kit versus going fully DIY given the quality of the microphones. More to follow as I make progress.

Full Script in Progress

    #!/usr/bin/env python3
    # Copyright 2017 Google Inc.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    """Run a recognizer using the Google Assistant Library.

    The Google Assistant Library has direct access to the audio API, so this Python
    code doesn't need to record audio. Hot word detection "OK, Google" is supported.

    The Google Assistant Library can be installed with:
        env/bin/pip install google-assistant-library==0.0.2

    It is available for Raspberry Pi 2/3 only; Pi Zero is not supported.
    """

    import logging
    import sys
    import subprocess

    import requests
    import paho.mqtt.client as mqtt

    import aiy.assistant.auth_helpers
    import aiy.audio
    import aiy.voicehat
    from google.assistant.library import Assistant
    from google.assistant.library.event import EventType

    from luma.led_matrix.device import max7219
    from luma.core.interface.serial import spi, noop
    from luma.core.legacy import show_message
    from luma.core.legacy.font import proportional, CP437_FONT

    # MAX7219 display: four cascaded 8x8 blocks on SPI port 0, device 0 (CE0)
    serial = spi(port=0, device=0, gpio=noop())
    device = max7219(serial, cascaded=4, block_orientation=-90)

    msg = "Starting up..."
    print(msg)
    show_message(device, msg, fill="white", font=proportional(CP437_FONT))

    # Config Parameters (replace the placeholders with your own values)
    autoremote_url = "https://autoremotejoaomgcd.appspot.com/sendmessage?key=yourAutoRemoteKey&message="

    # MQTT
    announceTopic = "aiy/fr/announce"
    username = "mqttUserName"
    password = "mqttPassword"
    lwtTopic = "aiy/fr/lwt"
    lwtMsg = "family room aiy is down"
    host = "mqttBrokerAddress"
    port = 1883


    def onMessage(client, userdata, msg):
        # msg.payload arrives as bytes; decode it so the TTS does not read out "b'...'"
        text = msg.payload.decode('utf-8')
        print(text)
        aiy.audio.say(text)


    def onConnect(client, userdata, flags, rc):
        # Subscribe on every (re)connect
        client.subscribe(announceTopic)


    def onDisconnect(client, userdata, rc):
        """Do nothing"""


    client = mqtt.Client("radio")
    client.on_connect = onConnect
    client.on_disconnect = onDisconnect
    client.on_message = onMessage
    client.username_pw_set(username, password)
    client.will_set(lwtTopic, lwtMsg, 0, False)
    client.connect(host, port, 60)
    client.loop_start()  # run the MQTT network loop in a background thread

    logging.basicConfig(
        level=logging.INFO,
        format="[%(asctime)s] %(levelname)s:%(name)s:%(message)s"
    )


    def power_off_pi():
        aiy.audio.say('Goodbye!')
        show_message(device, 'Goodbye!', fill="white", font=proportional(CP437_FONT))
        subprocess.call('sudo shutdown now', shell=True)


    def reboot_pi():
        aiy.audio.say('See you in a bit!')
        show_message(device, 'Rebooting', fill="white", font=proportional(CP437_FONT))
        subprocess.call('sudo reboot', shell=True)


    def autoremote(message):
        # Send the (already URL-escaped) message to the phone via AutoRemote
        r = requests.get(autoremote_url + message)
        show_message(device, 'Autoremote', fill="white", font=proportional(CP437_FONT))


    def openhab_command(item, state):
        # POST the command as plain text to the Item's REST endpoint
        url = 'http://ohserver:8080/rest/items/' + item
        headers = {'Content-Type': 'text/plain',
                   'Accept': 'application/json'}
        r = requests.post(url, headers=headers, data=state)

        if r.status_code == 200:
            aiy.audio.say('Command sent')
            show_message(device, 'Command sent', fill="white", font=proportional(CP437_FONT))
        elif r.status_code == 400:
            aiy.audio.say('Bad command')
            show_message(device, 'Bad command', fill="white", font=proportional(CP437_FONT))
        elif r.status_code == 404:
            aiy.audio.say('Unknown item')
            show_message(device, 'Unknown Item', fill="white", font=proportional(CP437_FONT))
        else:
            aiy.audio.say('Command failed')
            show_message(device, 'Command failed', fill="white", font=proportional(CP437_FONT))


    def play_pandora():
        aiy.audio.say('Playing some Pandora')
        autoremote("play%20pandora")
        show_message(device, 'Pandora', fill="white", font=proportional(CP437_FONT))


    def play_google_music():
        aiy.audio.say('Playing some Google Music')
        autoremote("play%20google%20music")
        show_message(device, 'Google Music', fill="white", font=proportional(CP437_FONT))


    def stop_music():
        aiy.audio.say('Stopping the music')
        autoremote("stop%20music")
        show_message(device, 'Stopping music', fill="white", font=proportional(CP437_FONT))


    def volume(direction):
        aiy.audio.say('Volume ' + direction)
        autoremote("volume%20" + direction)
        show_message(device, 'Music volume ' + direction, fill="white", font=proportional(CP437_FONT))


    def process_event(assistant, event):
        status_ui = aiy.voicehat.get_status_ui()
        if event.type == EventType.ON_START_FINISHED:
            status_ui.status('ready')
            if sys.stdout.isatty():
                print('Say "OK, Google" then speak, or press Ctrl+C to quit...')
            show_message(device, "Ready", fill="white", font=proportional(CP437_FONT))

        elif event.type == EventType.ON_CONVERSATION_TURN_STARTED:
            status_ui.status('listening')
            show_message(device, "Listening", fill="white", font=proportional(CP437_FONT))

        elif event.type == EventType.ON_RECOGNIZING_SPEECH_FINISHED and event.args:
            # Custom "do this" commands are intercepted here
            print('You said:', event.args['text'])
            text = event.args['text'].lower()
            if text == 'do this power off':
                assistant.stop_conversation()
                power_off_pi()
            elif text == 'do this reboot':
                assistant.stop_conversation()
                reboot_pi()
            elif text == 'do this play pandora':
                assistant.stop_conversation()
                play_pandora()
            elif text == 'do this play google music':
                assistant.stop_conversation()
                play_google_music()
            elif text == 'do this stop music':
                assistant.stop_conversation()
                stop_music()
            elif text == 'do this volume up':
                assistant.stop_conversation()
                volume("up")
            elif text == 'do this volume down':
                assistant.stop_conversation()
                volume("down")
            # No longer needed with Official Google Assistant Support, kept for reference
            # elif text == 'do this lights on':
            #     assistant.stop_conversation()
            #     openhab_command("gLights_ALL", "ON")
            # elif text == 'do this lights off':
            #     assistant.stop_conversation()
            #     openhab_command("gLights_ALL", "OFF")
            # else:
            #     aiy.audio.say('I do not understand the command ' + text)

        elif event.type == EventType.ON_END_OF_UTTERANCE:
            status_ui.status('thinking')
            show_message(device, 'Thinking', fill="white", font=proportional(CP437_FONT))

        elif event.type == EventType.ON_CONVERSATION_TURN_FINISHED:
            status_ui.status('ready')
            show_message(device, 'Ready', fill="white", font=proportional(CP437_FONT))

        elif event.type == EventType.ON_ASSISTANT_ERROR and event.args and event.args['is_fatal']:
            sys.exit(1)


    def main():
        credentials = aiy.assistant.auth_helpers.get_assistant_credentials()
        with Assistant(credentials) as assistant:
            for event in assistant.start():
                process_event(assistant, event)


    if __name__ == '__main__':
        main()

Ugh, this is getting ugly. I’ll need to start refactoring soon.
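One likely first step for that refactoring is to replace the long elif chain with a lookup table. The sketch below shows the idea only; find_command and the COMMANDS table are hypothetical names, and the handlers are assumed to be the existing functions from the script wrapped so they take no arguments.

```python
from functools import partial

PREFIX = 'do this '


def find_command(text, commands, prefix=PREFIX):
    """Return the zero-argument handler for a spoken phrase, or None.

    `commands` maps the words after the prefix to a callable.
    """
    text = text.lower().strip()
    if not text.startswith(prefix):
        return None  # not a custom command; let Google Assistant handle it
    return commands.get(text[len(prefix):])


# In the script the table could look something like this:
# COMMANDS = {
#     'power off': power_off_pi,
#     'reboot': reboot_pi,
#     'play pandora': play_pandora,
#     'volume up': partial(volume, 'up'),
#     'volume down': partial(volume, 'down'),
# }
#
# and the ON_RECOGNIZING_SPEECH_FINISHED branch shrinks to:
#     handler = find_command(event.args['text'], COMMANDS)
#     if handler:
#         assistant.stop_conversation()
#         handler()
```

Returning the handler instead of calling it lets the caller stop the conversation first, which matches the order used in the current elif chain.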