Hello all,

This small tutorial explains the basics of setting up a log and system monitoring environment. Whenever my openHAB setup has issues, I use it to analyse what went wrong, and when, so I can find a solution. That is difficult to do from the text files alone, and sometimes openHAB stops being happy because of platform issues (for example, running low on RAM). That’s why I integrated both log and platform monitoring in a Grafana dashboard. It’s not easy to figure out all the steps, so I wanted to share my experience and hope others can benefit from it.

I do log monitoring with:

- Promtail as log file scraper

- Loki as log ‘database’

I do system monitoring with:

- Node exporter as exporter of platform metrics (e.g. available RAM on a Raspberry Pi)

- Prometheus as metrics scraper and ‘database’

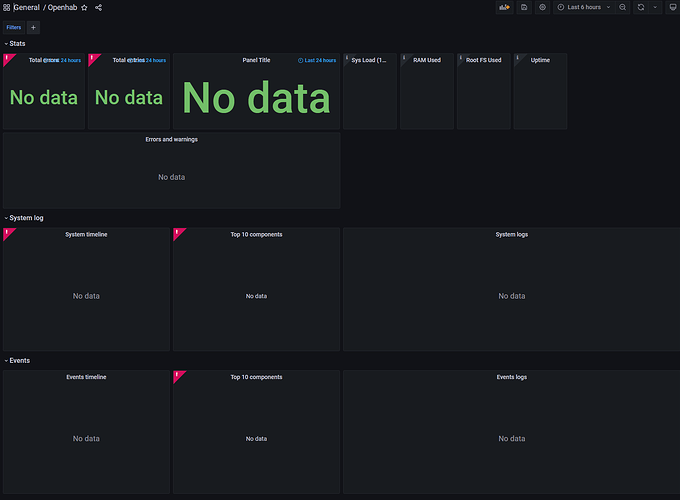

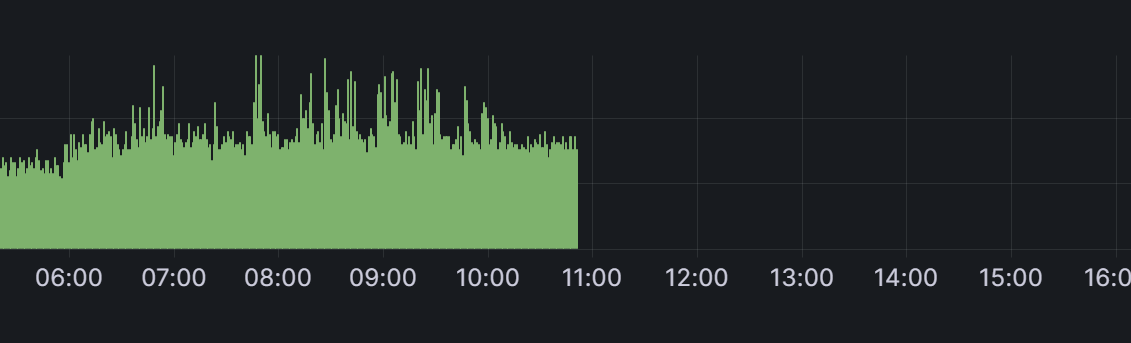

To start this topic with some graphics, this is my dashboard in Grafana:

Note: I’m currently migrating from textual item, rule and thing definitions to the UI, so I have some errors to tackle. But that just makes the visuals more interesting.

I’m not going to go over the details of how to install the separate components; there are dedicated articles about this on the web. I would, however, recommend installing these (and openHAB for that matter) as Docker containers. This topic is specifically meant to explain the integration of openHAB with the other components.

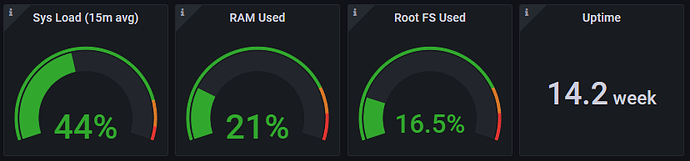

There is no openHAB-specific configuration for Node exporter and Prometheus, so you should be able to install and configure these components using internet sources. Once that works, these indicators from my dashboard are ready to put in your dashboard:

Same goes for Loki.
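For reference, a Prometheus scrape job for Node exporter can be as small as the sketch below. The host name is a made-up placeholder, not taken from my setup; 9100 is the default Node exporter port.

```yaml
# prometheus.yml (fragment), a minimal sketch
scrape_configs:
  - job_name: node
    static_configs:
      - targets:
          # hypothetical host running Node exporter; adjust to your platform
          - raspberrypi.local:9100
```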

Promtail needs access to the openhab.log and events.log files. I run this component in Docker and mount the user data volume from my openHAB Docker container into the Promtail container (at the /logs folder in the Promtail container). You then need a configuration file for Promtail that creates a job for each file and tells Promtail how to parse the log file lines. This is my configuration:

```yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://server.running.loki:3100/loki/api/v1/push

scrape_configs:
  # One job per log file
  - job_name: openhab-events
    pipeline_stages:
      # Split each line into time, level, component and output
      - regex:
          expression: '^(?P<time>.+) \[(?P<level>[a-zA-Z]+) ?\] \[(?P<component>[a-zA-Z \.]+)\] - (?P<output>.+)$'
      - labels:
          level:
          component:
      - timestamp:
          # Go reference-time layout, not a sample timestamp
          format: "2006-01-02 15:04:05.000"
          source: time
      - output:
          source: output
    static_configs:
      - targets:
          - localhost
        labels:
          job: openhab-events
          __path__: /logs/logs/events.log

  - job_name: openhab-system
    pipeline_stages:
      # Merge continuation lines (e.g. stack traces) into the entry that started them
      - multiline:
          firstline: '^(?P<time>.+) \[(?P<level>[a-zA-Z]+) ?\] \[(?P<component>[a-zA-Z \.]+)\] - (?P<output>.+)$'
          max_wait_time: 1s
      - regex:
          expression: '^(?P<time>.+) \[(?P<level>[a-zA-Z]+) ?\] \[(?P<component>[a-zA-Z \.]+)\] - (?P<output>.+)$'
      - labels:
          level:
          component:
      - timestamp:
          # Go reference-time layout, not a sample timestamp
          format: "2006-01-02 15:04:05.000"
          source: time
      - output:
          source: output
    static_configs:
      - targets:
          - localhost
        labels:
          job: openhab-system
          __path__: /logs/logs/openhab.log
```
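The volume mount described earlier could look roughly like this in Docker Compose. This is only a sketch: the volume name `openhab_userdata` and the local file name `promtail-config.yml` are assumptions for illustration, so substitute whatever your openHAB container actually uses. The userdata volume is mounted at /logs, which is why the paths in the Promtail configuration start with /logs/logs/.

```yaml
# docker-compose.yml (fragment), a sketch under the assumptions above
services:
  promtail:
    image: grafana/promtail:latest
    volumes:
      # hypothetical named volume holding the openHAB userdata folder (read-only)
      - openhab_userdata:/logs:ro
      # hypothetical local file containing the Promtail configuration
      - ./promtail-config.yml:/etc/promtail/config.yml:ro
    command: -config.file=/etc/promtail/config.yml

volumes:
  openhab_userdata:
    # the volume is created and owned by the openHAB container setup
    external: true
```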

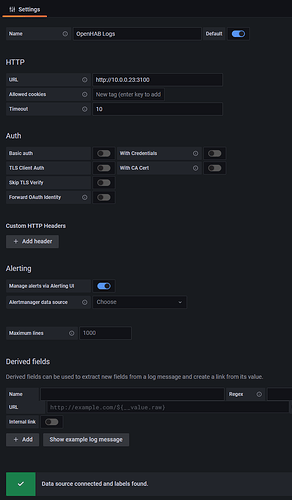

As for Grafana: after installation and basic setup, the first thing you need to do is configure a data source for Loki and one for Prometheus. This is as simple as creating a data source and entering the URL of each component. Now your Grafana has access to the data that is scraped.
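I created the data sources through the UI, but if you prefer files, Grafana can also provision them from YAML at startup. The sketch below is an alternative, not what I use; the Prometheus host name is a made-up placeholder, and 9090 is the default Prometheus port.

```yaml
# e.g. /etc/grafana/provisioning/datasources/openhab.yml, a sketch
apiVersion: 1

datasources:
  - name: Loki
    type: loki
    access: proxy
    url: http://server.running.loki:3100
  - name: Prometheus
    type: prometheus
    access: proxy
    # hypothetical host; point this at your own Prometheus instance
    url: http://server.running.prometheus:9090
```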

And now for the dashboard. Start with something simple, such as importing an existing template into Grafana for the Node exporter part. I would advise this one: Node Exporter Full dashboard for Grafana | Grafana Labs

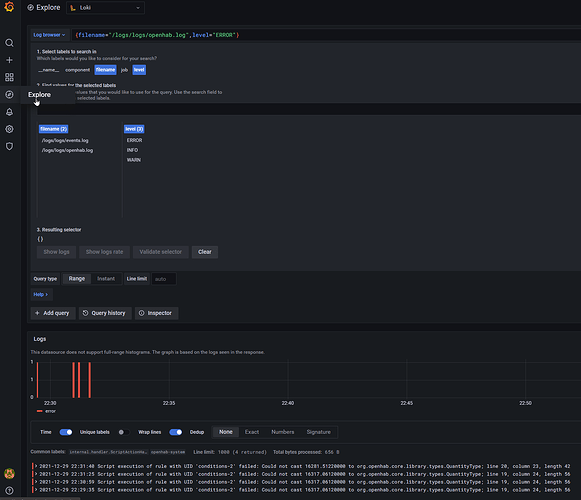

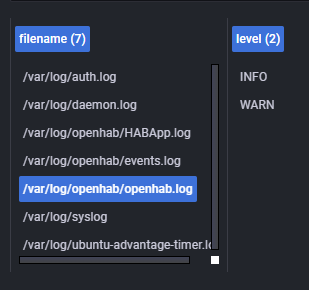

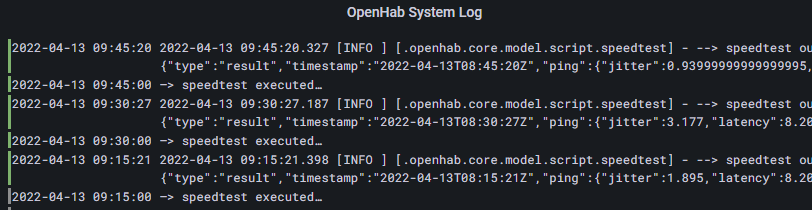

You should be able to explore your openhab data and do simple queries like this:

If you want to copy my dashboard, here is the JSON template:

Grafana openhab dashboard.json (25.2 KB)

Grafana also allows for alerting when certain numbers go beyond thresholds. I haven’t gotten to that part yet.