What / Why / the Ultimate Goal

The goal is completely self-hosted, private, multi-room voice control of openHAB, built almost entirely on F/LOSS software and hardware[1], without relying on any “cloud” services or on multinational companies that seem to be in the business of gathering all your data, such as whether you are home or what you say privately to your wife (which is very creepy).

Note 1: I think the only exception would be the RPi bootloader (if you are using an RPi), but there are hardware alternatives.

Oh, that can never happen (you say)!

Background

I recently received my ReSpeaker USB Mic Array, which I had planned on using with snips.ai. Today I set out to implement this and, while brushing up on my reading, learned that Snips sold out to Sonos and will be discontinuing console access, essentially rendering it useless for our purposes.

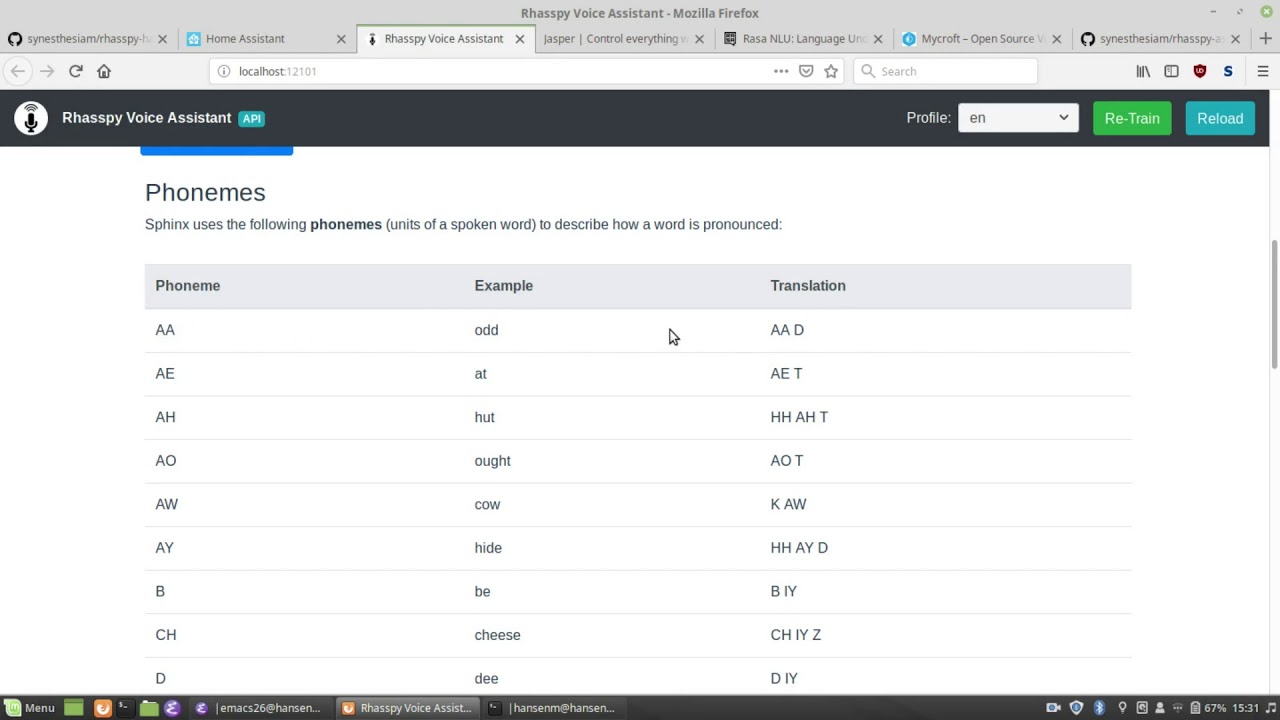

After reading that thread (and others) and doing some further research, it seems to me that Rhasspy is by far the most feature-complete and stable alternative out there right now. It also appears to be genuinely F/LOSS (unlike Snips, who never fully released all of their source code). In short, I feel confident enough to invest my time in moving forward with this solution.

Progress Thus Far

In production I plan on having a number of satellites (one in each room) on lower-powered devices, streaming audio to a central, more powerful SBC (e.g. ODROID-XU4) that does the heavy lifting (STT, training, and intent recognition). For now, though, I am just trying to get this working on my plain amd64 Debian Buster desktop.

I plugged the ReSpeaker USB Mic Array into my desktop, and it immediately appeared in PulseAudio Volume Control (yay for truly F/LOSS/H!).

I don’t use Docker or Home Assistant (Hass.io), so I installed Rhasspy into a Python virtual environment. This went pretty seamlessly, and after fiddling with a few settings in the web interface and in PulseAudio Volume Control, I was quickly training Rhasspy by writing sentences. Recognition was actually pretty good right out of the box with the default settings.

Next I started trying to get these intents into openHAB. It seemed to me that publishing to MQTT might be the easiest way, but I got so lost trying to parse the JSON that my head now hurts, so I have come here for help. I even installed MQTT Explorer along the way, and I am pretty sure I have the correct topic (hermes/intent/DeskLights, for instance, as set in Rhasspy).

I have searched and read several forum posts. The gist seems to be: create a text item to receive the MQTT JSON payload, parse that payload, and then write a rule to update something else? That seemed overly complicated to me.
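For reference, here is a minimal Python sketch of just the parsing step, outside of openHAB. The payload layout (`intent.intentName`, a `slots` list with `slotName` and `value`) is an assumption based on the Snips/Hermes-style messages, and the `state` slot and sample sentence are hypothetical, so compare it against what MQTT Explorer actually shows on hermes/intent/DeskLights before relying on it.

```python
import json

# Hypothetical sample payload. The field names are an assumption
# (Snips/Hermes-style layout); check them against MQTT Explorer.
SAMPLE_PAYLOAD = json.dumps({
    "input": "turn on the desk lights",
    "intent": {"intentName": "DeskLights", "confidenceScore": 1.0},
    "slots": [
        {"slotName": "state", "value": {"kind": "Unknown", "value": "on"}}
    ],
})


def parse_intent(payload):
    """Return (intent_name, slots_dict) from a Hermes-style intent payload."""
    message = json.loads(payload)
    intent_name = message.get("intent", {}).get("intentName", "")
    slots = {}
    for slot in message.get("slots", []):
        value = slot.get("value")
        # The value may be nested as {"value": ...} or be a bare value;
        # accept both forms.
        if isinstance(value, dict):
            value = value.get("value")
        slots[slot.get("slotName")] = value
    return intent_name, slots


def to_command(slots, slot_name="state"):
    """Map a slot value such as "on"/"off" to an openHAB Switch command."""
    value = str(slots.get(slot_name, "")).strip().upper()
    return value if value in ("ON", "OFF") else None


if __name__ == "__main__":
    name, slots = parse_intent(SAMPLE_PAYLOAD)
    print(name, slots, to_command(slots))
```

The same extraction could presumably be done inside openHAB with a JSONPATH transformation on the text item, which is the route the forum posts describe.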

The first item I thought I would try to control was a simple light switch (via the MySensors binding), but I couldn’t figure out how to simply connect the two.
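One possible way to connect the two without an intermediate text item might be to send the command straight to the switch item through openHAB's REST API, which accepts a plain-text command via POST to `/rest/items/<item name>`. The sketch below is untested against my setup: the item name `DeskLights_Switch` is hypothetical, and the base URL assumes openHAB on the same machine at the default port 8080.

```python
import urllib.request


def build_command_request(item_name, command, base_url="http://localhost:8080"):
    """Build a POST request that sends a plain-text command to an item."""
    url = f"{base_url}/rest/items/{item_name}"
    return urllib.request.Request(
        url,
        data=command.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def send_command(item_name, command, base_url="http://localhost:8080"):
    """Send the command and return the HTTP status code."""
    request = build_command_request(item_name, command, base_url)
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status


if __name__ == "__main__":
    # "DeskLights_Switch" is a made-up item name; replace it with your own.
    request = build_command_request("DeskLights_Switch", "ON")
    print(request.get_method(), request.full_url, request.data)
```

Only `send_command` touches the network, so the request can be inspected before anything is actually switched.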

Taking a Strategic Step Back

I also wonder if I should just have Rhasspy do the speech-to-text and then let openHAB’s Rule Voice Interpreter parse the text. I think there is a way to send just the text from Rhasspy.
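If that route is viable, a rough sketch might look like the following: take the transcribed sentence and POST it as plain text to openHAB's `/rest/voice/interpreters` endpoint, which hands it to the default human language interpreter. Two things here are assumptions I have not verified: that Rhasspy delivers the transcription as JSON with a `text` field, and that the default interpreter in openHAB is set to the one you want. The sample sentence is made up.

```python
import json
import urllib.request


def extract_text(payload):
    """Pull the transcribed sentence out of a JSON payload.

    Assumes the transcription lives under a "text" key (unverified).
    """
    return json.loads(payload).get("text", "").strip()


def build_interpret_request(text, base_url="http://localhost:8080", language="en"):
    """Build a POST request for openHAB's default voice interpreter."""
    return urllib.request.Request(
        f"{base_url}/rest/voice/interpreters",
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain", "Accept-Language": language},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical transcription payload for illustration only.
    sample = json.dumps({"text": "turn on the desk lights"})
    request = build_interpret_request(extract_text(sample))
    print(request.get_method(), request.full_url, request.data)
    # To actually send it: urllib.request.urlopen(request, timeout=5)
```

The trade-off, as far as I can tell, is that Rhasspy's trained intents would go unused and all sentence matching would depend on what the openHAB interpreter understands.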

I’m not sure what the best way forward is at this point, so any help would be greatly appreciated. With Snips.ai’s recent sale to Sonos, I don’t think I will be the only one asking. In fact, I was a little surprised not to find a thread about this already, so I am sure that if we are able to figure something out, others will be helped too.